High-performance computing (HPC) has traditionally relied on clusters of compute servers deployed in dedicated facilities. In recent years, demands for scale and performance have pushed HPC from on-premises facilities to the cloud, a transition that offers new opportunities as well as significant hurdles.

On the positive side, the cloud offers virtually unlimited on-demand computing and storage resources because cloud platforms provide elastic capacity that can grow (and shrink) dynamically when resource demands change.

Moving to the cloud can also bring economic benefits. The pay-as-you-go model eliminates upfront capital expenses and avoids paying for unused compute cycles, and the range of compute instance types available in the cloud allows users to pay only for what they need, when they need it. The savings are not guaranteed, however: a stable workload that runs 24x7x365 for several years may be cheaper to run on dedicated hardware (cloud service providers have responded to this case by offering bare-metal instances).
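To make the trade-off concrete, here is a rough break-even calculation, sketched in Python. The prices are hypothetical placeholders, and the model deliberately ignores discounts such as reserved or committed-use pricing; it only compares paying per hour against a fixed cost of ownership.

```python
"""Break-even sketch: pay-as-you-go cloud vs. dedicated hardware.

All prices are hypothetical placeholders, not quotes from any provider.
"""

HOURS_PER_YEAR = 24 * 365


def dedicated_cost(capex: float, annual_opex: float, years: float) -> float:
    """Cost of owning hardware: upfront purchase plus yearly operating cost."""
    return capex + annual_opex * years


def cloud_cost(hourly_rate: float, utilization: float, years: float) -> float:
    """Pay-as-you-go cost: only the hours the workload actually runs are billed."""
    return hourly_rate * utilization * HOURS_PER_YEAR * years


def break_even_utilization(hourly_rate: float, capex: float,
                           annual_opex: float, years: float) -> float:
    """Fraction of wall-clock time above which dedicated hardware is cheaper."""
    return dedicated_cost(capex, annual_opex, years) / cloud_cost(hourly_rate, 1.0, years)


if __name__ == "__main__":
    # Hypothetical: a $3.00/hour instance vs. a $40,000 server with $6,000/year upkeep.
    u = break_even_utilization(hourly_rate=3.0, capex=40_000, annual_opex=6_000, years=3)
    print(f"Dedicated hardware is cheaper above {u:.0%} utilization")
```

With these made-up numbers the break-even point is roughly 74% utilization over three years: a workload busy less often than that favors pay-as-you-go, while one running around the clock favors dedicated hardware.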

However, running applications in public clouds introduces new challenges. Each cloud service provider has an extensive catalog of services, and each service has a wide array of features. Without the knowledge required to configure and optimize cloud services, the researcher may encounter unpredictable or unsatisfactory application performance, unexpected costs, or unnecessary security risks.

This is where MemVerge’s Memory Machine Cloud (MMCloud) can help. MMCloud is an easy-to-use cloud automation platform designed for scientific computing and data science. It simplifies the deployment of data-intensive pipelines and interactive computing applications in the cloud through automation and containerization.

Containers are the key technology that enables application mobility and automates the deployment of applications, at scale, in the cloud. The container includes all the software dependencies and runtime libraries needed to run the application in a reproducible way. But container deployment requires a cloud orchestration platform to schedule, instantiate, and manage containers. MMCloud provides an intuitive GUI (as well as a CLI) that allows users who are not cloud specialists to deploy complex workflows as containerized applications in the cloud.

There are many reasons why scientists rely on MMCloud to enhance their research capabilities. Here are the top five reasons why you should choose MMCloud as the platform to run your cloud workloads.

1. Seamless Expansion of High-performance Computing to the Cloud

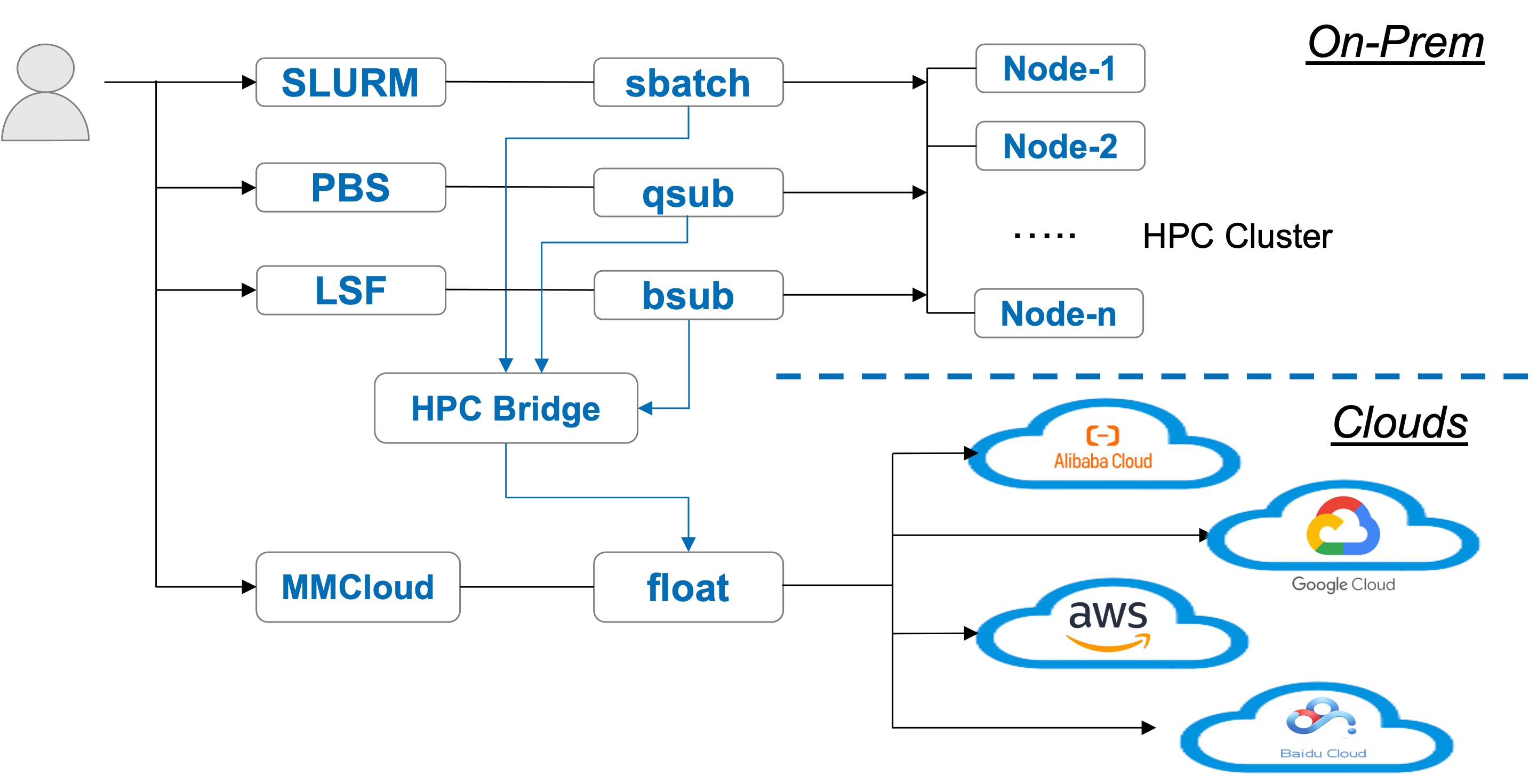

Hybrid cloud (a combination of on-premises and cloud resources) is a popular architecture for HPC because of its flexibility and scalability. When demand for computing resources exceeds what is available locally (on-premises), applications can “burst” to the cloud.
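The bursting idea can be sketched as a simple placement rule. This is a hypothetical illustration, not how MMCloud, SLURM, or PBS actually schedule jobs: jobs are considered in submission order, each one runs locally if enough local cores are free, and otherwise it is sent to the cloud.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Job:
    name: str
    cores: int


def place_jobs(jobs: List[Job], local_free_cores: int) -> Tuple[List[str], List[str]]:
    """Assign each job to local or cloud; return (local_names, cloud_names)."""
    local: List[str] = []
    cloud: List[str] = []
    free = local_free_cores
    for job in jobs:                  # consider jobs in submission order
        if job.cores <= free:
            local.append(job.name)    # fits on the on-premises cluster
            free -= job.cores
        else:
            cloud.append(job.name)    # would have to wait locally, so burst instead
    return local, cloud


if __name__ == "__main__":
    queue = [Job("align", 32), Job("assemble", 64), Job("qc", 16)]
    print(place_jobs(queue, local_free_cores=64))
```

Note that a smaller job submitted later can still fit locally after a larger one has burst to the cloud; here `qc` stays on-premises while `assemble` goes to the cloud.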

MMCloud augments the local HPC environment with public cloud resources by letting users launch cloud jobs through the same familiar HPC job managers, such as SLURM and PBS. This way, scientists can operate in an efficient hybrid environment. Containerization and automation are core features of MMCloud: it automates the deployment of containerized applications into the cloud and automatically deletes cloud resources after a job completes, removing the risk of unattended resources adding to the monthly cloud bill. Interactive sessions can be hibernated and restarted when they are needed again.

Figure 1 MMCloud expands HPC to clouds

2. Insightful Monitoring of Cloud Workloads

Some users are reluctant to move workloads to the cloud because of a concern that they will have limited insight into, and control over, how their applications run in the cloud. Will the job complete in a shorter or longer time? Are the resources over- or under-provisioned?

Visibility into workload performance and resource utilization is critical when operating in the cloud. Granular monitoring of resource usage and costs can identify opportunities for optimization through rightsizing, shutting down idle resources or shifting workloads based on the current prices of compute instances. While cloud service providers do offer monitoring tools, these tools are not always easy to use and often come with additional costs.

MMCloud provides a comprehensive solution with advanced monitoring and metering capabilities, ensuring that scientists have a detailed understanding of how their workloads run. CostReporter summarizes how much you spend on the jobs running in the cloud. WaveWatcher makes it easy to see real-time consumption of resources, including CPU, memory, storage, and network transfer speeds. For example, an application may exceed its storage limits or crash because of insufficient resources. Issues like these can be identified using MMCloud’s graphical interface, making troubleshooting much more straightforward.

MMCloud generates event and performance logs at both the job and host (worker node) level. Logs can be viewed in real-time to track the progress of a job as it executes, or downloaded after a job completes to identify issues or errors. The monitoring features enable researchers to fine-tune their environments for maximum efficiency.

Figure 2 MMCloud WaveWatcher provides insights into cloud workloads

3. Reliable Spot Instances

Almost all cloud service providers offer spot instances as a way for users to purchase excess computing capacity at a significantly lower price than on-demand instances (discounts can be as much as 90%). The trade-off is unpredictable availability: the cloud service provider can reclaim these instances with little warning (usually two minutes or less). Spot instances are ideal for stateless applications. They can also be used in stateful, batch-oriented pipelines if the workflow manager can requeue failed jobs, although total cost and wall-clock time then become unpredictable.

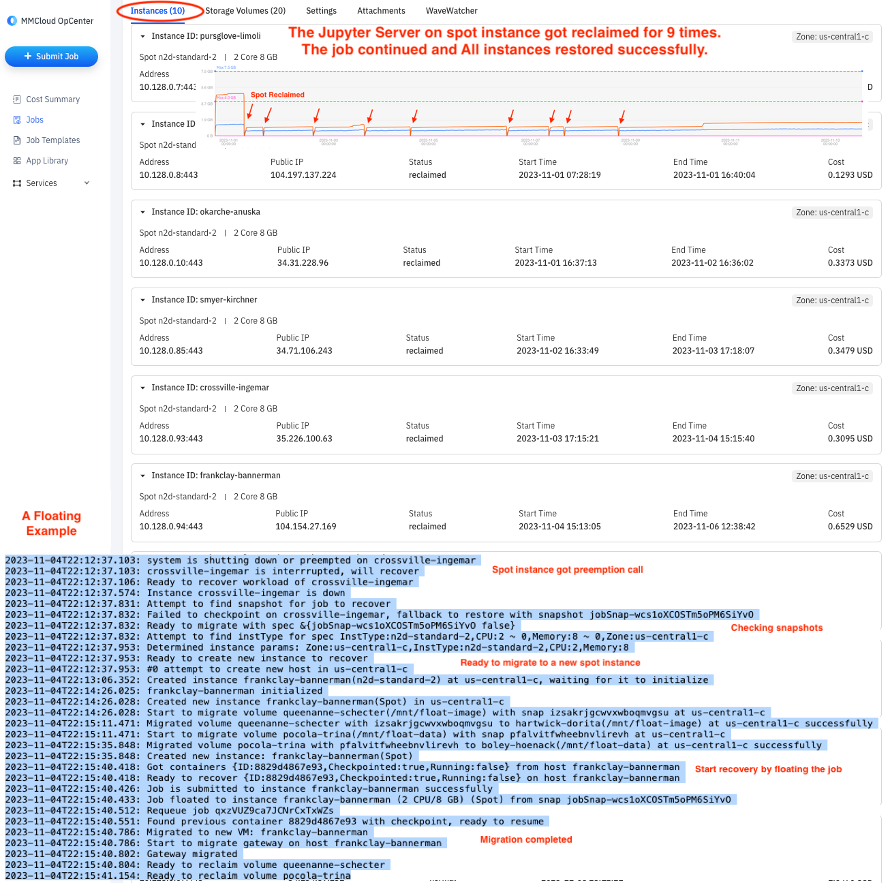

MMCloud's SpotSurfer feature allows stateful applications to always run to completion by "surfing" to a new VM instance if the underlying spot instance is reclaimed. Customers can realize the cost savings of spot instances without the risk of job interruptions. The technology that makes SpotSurfer possible is AppCapsule, which creates a snapshot of the job's in-memory state as well as its file system data. AppCapsule is triggered automatically when the cloud service provider signals that it is reclaiming the spot instance: job execution pauses, and then resumes on a new spot instance.
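AppCapsule captures state at the system level, without changes to the application. The Python sketch below only mimics the same pause, snapshot, and resume control flow at the application level, under stated assumptions: the job's state is a small picklable object, a hypothetical `reclaim_at` set of step numbers stands in for the provider's reclamation notice, and `pickle` stands in for the snapshot. It is not how AppCapsule is implemented.

```python
import pickle
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass
class JobState:
    """Everything the job needs to resume: a progress counter and a partial result."""
    step: int = 0
    total: int = 0


def run_on_instance(state: JobState, n_steps: int,
                    reclaim_at: Set[int]) -> Tuple[JobState, Optional[bytes]]:
    """Run until done, or until a (simulated) reclamation notice arrives.

    Returns the state and a snapshot; the snapshot is None when the job finished.
    """
    while state.step < n_steps:
        if state.step in reclaim_at:
            reclaim_at.discard(state.step)     # each notice is handled once
            return state, pickle.dumps(state)  # snapshot before the VM goes away
        state.total += state.step              # the "work": sum of step indices
        state.step += 1
    return state, None


def run_to_completion(n_steps: int, reclaim_at: Set[int]) -> Tuple[JobState, int]:
    """Resume from the latest snapshot on a 'new instance' until the job is done."""
    pending = set(reclaim_at)                  # copy so the caller's set is untouched
    state = JobState()
    migrations = 0
    while True:
        state, snapshot = run_on_instance(state, n_steps, pending)
        if snapshot is None:
            return state, migrations
        migrations += 1
        state = pickle.loads(snapshot)         # restore on the replacement instance


if __name__ == "__main__":
    final, moves = run_to_completion(10, reclaim_at={3, 7})
    print(final.total, moves)
```

The job is interrupted twice yet produces the same result as an uninterrupted run, because no completed step is ever repeated.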

For interactive sessions, MMCloud provides a reverse proxy so that each session remains reachable by clients at the same IP address, even if the session moves to a different virtual machine with a different IP address.

Figure 3 MMCloud SpotSurfer protects workloads on spot instances

4. Cost Control

Cost management is a major concern for researchers, many of whom are grant-funded with strict budget constraints. While cloud services can be cost-effective, they can also lead to unpredictable expenses if not managed carefully. Institutions must develop new financial governance models to control cloud spending, ensuring that costs align with research budgets. Managing costs at cloud scale demands continuous monitoring of cloud resource usage to guard against runaway expenses. MMCloud’s Job Cost Reporting tools allow for precise budgeting, and the SurfZone feature provides real-time enforcement of limits on cloud spending.

Lifecycle management of cloud-based workloads can be critical for cost control. MMCloud provides control over resources, for example by enforcing automated schedules for job startup and shutdown, and maximum job execution times. This level of control is essential for managing costs in group settings where multiple users may run concurrent experiments. Individual users can also take action, for example by canceling jobs, moving jobs to smaller (cheaper) instances, or suspending interactive sessions. These actions reduce costs and help avoid charges for idle resources.
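A lifecycle policy of this kind boils down to a few checks evaluated periodically against each job. The sketch below is hypothetical: the `Policy` and `JobStatus` fields, the thresholds, and the returned actions are illustrative names, not MMCloud or SurfZone settings.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    max_runtime_hours: float     # hypothetical hard limit on execution time
    max_spend_usd: float         # hypothetical per-job spending cap
    idle_suspend_minutes: float  # suspend interactive sessions idle this long


@dataclass
class JobStatus:
    runtime_hours: float
    hourly_rate_usd: float
    idle_minutes: float
    interactive: bool


def decide(job: JobStatus, policy: Policy) -> str:
    """Return the action a periodic policy check would take for this job."""
    spend = job.runtime_hours * job.hourly_rate_usd
    if spend >= policy.max_spend_usd:
        return "cancel"          # spending cap reached
    if job.runtime_hours >= policy.max_runtime_hours:
        return "cancel"          # ran past its maximum execution time
    if job.interactive and job.idle_minutes >= policy.idle_suspend_minutes:
        return "suspend"         # stop paying for an idle session
    return "continue"


if __name__ == "__main__":
    policy = Policy(max_runtime_hours=48, max_spend_usd=100, idle_suspend_minutes=30)
    notebook = JobStatus(runtime_hours=5, hourly_rate_usd=0.5, idle_minutes=45, interactive=True)
    print(decide(notebook, policy))
```

Checking the spending cap first means a job is stopped as soon as either limit is hit, whichever comes first; the idle check applies only to interactive sessions, which can be resumed later.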

Performance can be critical for cost control too. When jobs run on spot instances, slower jobs in a data pipeline have a higher chance of being reclaimed, and the time and cost penalty of starting over can be large. MMCloud’s integration with third-party high-performance file systems has improved the efficiency of file operations and has shown a significant advantage for Nextflow workflows.
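One simple way to quantify why long-running jobs are more exposed is to assume that reclamations arrive at a constant average rate (a Poisson process). This is a modeling assumption, not provider data; real reclamation rates vary by instance type, region, and time. Under it, the probability of finishing without interruption decays exponentially with runtime, and if every interruption forces a restart from scratch, the expected wall-clock time grows to (e^(λT) - 1)/λ rather than T, where λ is the reclamation rate and T the uninterrupted runtime.

```python
import math


def p_uninterrupted(runtime_hours: float, reclaim_rate_per_hour: float) -> float:
    """Probability that no reclamation occurs during the run (Poisson assumption)."""
    return math.exp(-reclaim_rate_per_hour * runtime_hours)


def expected_hours_with_restarts(runtime_hours: float, reclaim_rate_per_hour: float) -> float:
    """Expected wall-clock hours when every reclamation restarts the job from scratch.

    Classic result for exponential interruptions with no restart overhead:
    E = (exp(rate * T) - 1) / rate. With checkpoint/resume it would stay close to T.
    """
    return math.expm1(reclaim_rate_per_hour * runtime_hours) / reclaim_rate_per_hour


if __name__ == "__main__":
    rate = 0.05  # hypothetical: one reclamation per 20 hours on average
    for hours in (2, 20):
        print(f"{hours:>3} h job: P(no interruption) = {p_uninterrupted(hours, rate):.2f}, "
              f"expected time with restarts = {expected_hours_with_restarts(hours, rate):.1f} h")
```

With this made-up rate, a 2-hour job finishes undisturbed about 90% of the time, while a 20-hour job does so only about 37% of the time and, if forced to restart from scratch after each reclamation, takes roughly 34 hours on average.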

WaveRadar, an interactive dashboard on mmcloud.io, is a free FinOps tool for viewing historical EC2 spot prices across all major AWS regions. WaveRadar lets you identify the regions offering the lowest rates for a given instance type or instance family.
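At its core, such a comparison ranks regions by historical price. The sketch below assumes the price history has already been collected into a dictionary; the region names and prices are made-up placeholders, and WaveRadar itself may weigh prices differently.

```python
from statistics import mean
from typing import Dict, List

# Made-up spot price samples (USD/hour) for one instance type; not real market data.
PRICE_HISTORY: Dict[str, List[float]] = {
    "region-a": [0.41, 0.39, 0.44],
    "region-b": [0.36, 0.38, 0.35],
    "region-c": [0.47, 0.45, 0.46],
}


def rank_regions(history: Dict[str, List[float]]) -> List[str]:
    """Order regions from lowest to highest average historical spot price."""
    return sorted(history, key=lambda region: mean(history[region]))


if __name__ == "__main__":
    print(rank_regions(PRICE_HISTORY))
```

An average hides volatility; a fuller comparison might also look at price variance or how often prices spike.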

5. Rightsizing Cloud Resources

Compute instances available from cloud service providers range in size from one vCPU and 500 MB memory to 192 vCPUs and 1.5 TB memory (and even more in some specialized instances). Choosing the optimal cloud resources for scientific workloads is a complex task. Sizing for the peak load is safe but inefficient. Sizing for the average load reduces the cost but can lead to unacceptable performance or even out-of-memory (OOM) errors.
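The trade-off between sizing for the peak and sizing for the average shows up even in a small usage profile. The samples below are hypothetical; the sketch measures how much provisioned memory sits unused when sizing for the peak, and how often usage would exceed capacity (an OOM risk) when sizing for the average.

```python
from typing import List

# Hypothetical memory usage (GB), sampled at regular intervals over one job.
MEMORY_GB: List[float] = [4, 6, 30, 28, 8, 5, 5, 4]


def oom_fraction(samples: List[float], capacity_gb: float) -> float:
    """Share of samples whose usage would exceed the provisioned memory."""
    return sum(1 for s in samples if s > capacity_gb) / len(samples)


def unused_fraction(samples: List[float], capacity_gb: float) -> float:
    """Share of provisioned GB-time that is paid for but not used."""
    return 1 - sum(samples) / (capacity_gb * len(samples))


if __name__ == "__main__":
    peak = max(MEMORY_GB)
    average = sum(MEMORY_GB) / len(MEMORY_GB)
    print(f"size for peak ({peak} GB): {unused_fraction(MEMORY_GB, peak):.1%} unused")
    print(f"size for average ({average} GB): over capacity in "
          f"{oom_fraction(MEMORY_GB, average):.1%} of samples")
```

With these samples, sizing for the 30 GB peak leaves 62.5% of the paid-for memory unused, while sizing for the 11.25 GB average would run out of memory in 2 of the 8 intervals.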

MMCloud's WaveRider feature simplifies this process by removing the need to select a single instance for each task. WaveRider provides the capability to migrate running jobs to optimally sized virtual machines based on their real-time resource utilization.

Job migration can be initiated in three ways: manually, driven by policy, or programmatically. At any time, you can manually migrate a job to a specific instance type, or to an instance whose capacities you define. With a rules-based policy, WaveRider monitors the resource utilization of your workload. If the upper threshold for CPU or memory utilization is crossed for a specified interval, the job is migrated to a virtual machine that has more virtual CPUs or more memory. Similarly, if the lower threshold is crossed and utilization remains low for a specified interval, the job is moved to a smaller virtual machine. For jobs with a well-known resource usage profile, job migration can be programmed into the job script so that the job migrates at predetermined stages in the execution.
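The rules-based policy can be sketched as a threshold check over a sliding window of utilization samples. This is an illustration under assumptions, not WaveRider's implementation: the thresholds, the window length, and the `SIZES` catalog of (vCPU, GB) shapes are hypothetical, and the job moves one size step at a time.

```python
from typing import List, Optional

# Hypothetical instance catalog, smallest to largest: (vCPUs, memory in GB).
SIZES = [(2, 8), (4, 16), (8, 32), (16, 64)]


def migration_decision(samples: List[float], upper: float, lower: float,
                       window: int) -> Optional[str]:
    """Return 'scale_up' or 'scale_down' only if the last `window` samples all
    stayed beyond a threshold; a single spike or dip does not trigger a move."""
    if len(samples) < window:
        return None                      # not enough history yet
    recent = samples[-window:]
    if all(s > upper for s in recent):
        return "scale_up"
    if all(s < lower for s in recent):
        return "scale_down"
    return None


def next_size(index: int, decision: Optional[str]) -> int:
    """Move one step along SIZES, clamped to the smallest and largest shapes."""
    if decision == "scale_up":
        return min(index + 1, len(SIZES) - 1)
    if decision == "scale_down":
        return max(index - 1, 0)
    return index


if __name__ == "__main__":
    memory_util = [55.0, 88.0, 91.0, 93.0]   # percent, one sample per interval
    decision = migration_decision(memory_util, upper=85.0, lower=20.0, window=3)
    print(decision, SIZES[next_size(1, decision)])
```

Requiring the whole window to breach a threshold avoids migrating on momentary spikes; a production policy would likely also evaluate CPU and memory separately and wait for a cooldown period after each move.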

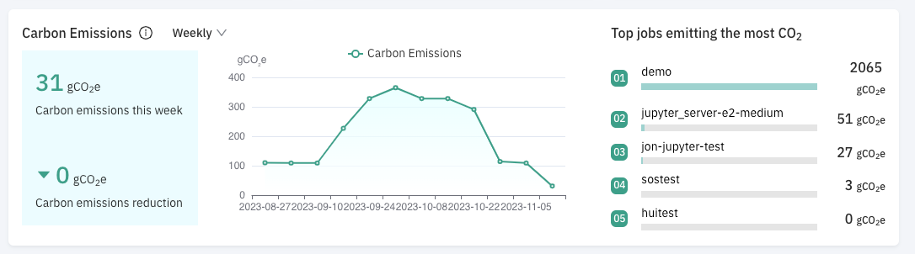

Rightsizing also improves the allocation and sharing of cloud resources, and optimizing resource usage can have a substantial impact on environmental sustainability. Users who proactively release underutilized resources allow the cloud service provider to increase server utilization, and fewer servers running at higher utilization lower both operational and embodied emissions. In a world increasingly conscious of climate change, optimizing cloud resource usage is a step towards reducing the overall carbon footprint of the IT industry. MMCloud provides a carbon emissions dashboard so that users can see the environmental impact of how they run their jobs in the cloud.

Figure 4 MMCloud Carbon Emission Monitor

Conclusion

Moving HPC workloads to the cloud, or bursting workloads to the cloud when needed, has benefits in terms of cost, scalability, efficiency, and sustainability. Challenges in usability and cost control in the cloud have prevented users from taking advantage of the cloud or a hybrid cloud architecture.

MMCloud is a powerful yet easy-to-use platform that makes cloud computing accessible to the research community. MMCloud is cloud-agnostic and is supported today on AWS, Google Cloud, and AliCloud. A free, no-obligation 30-day trial is available. Register at https://mmcloud.io/customer/register today.